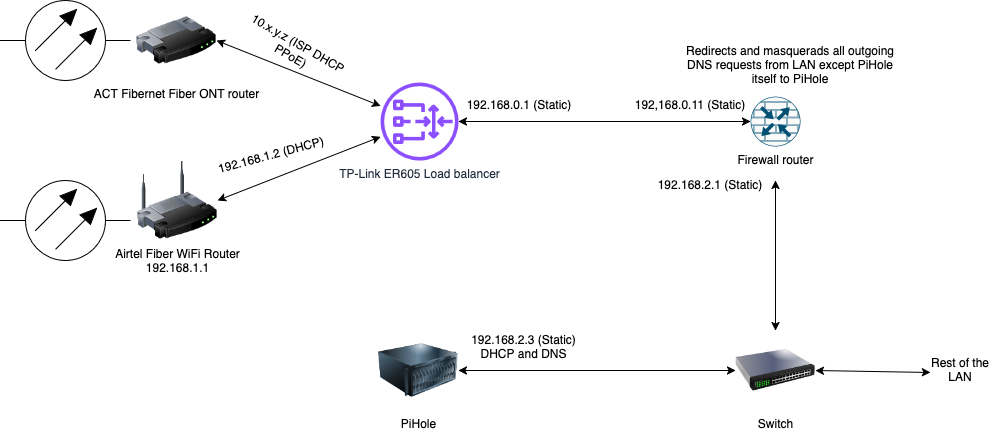

In my previous post, I explained how I set up an N100 mini PC as an OPNsense firewall in my home network. One of the main reasons for adding such a firewall was to transparently redirect all outgoing DNS requests to my local Pi-hole instance.

I have a Pi-hole instance serving DHCP and DNS in my LAN, and most client devices respect the DNS settings provided by the DHCP server. Android devices, however, ignore these settings and use Google's DNS servers instead (I have an Android TV at home). So I need this redirect in place for network-level ad blocking to work.

On my previous firewalls and gateway devices, I set up a DNAT rule that redirected all outgoing traffic on port 53 (except traffic originating from the Pi-hole itself) to the Pi-hole instance's address on port 53. Adding only the DNAT rule can cause “unexpected source” errors: the Pi-hole sees the client's own address as the source and replies to it directly, so the answer arrives from the Pi-hole's address instead of the address the client queried. To prevent this, I also had to add an SNAT rule that masquerades the redirected packets so that their source address becomes the firewall/gateway device's IP address.
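To see why the SNAT step matters, here is a toy Python model of the address rewriting. It is not real firewall code, just an illustration under simple assumptions: `CLIENT` and `FIREWALL` are hypothetical addresses, while 192.168.2.3 is the Pi-hole address used later in this post.

```python
from dataclasses import dataclass, replace

# Toy model only. CLIENT and FIREWALL are hypothetical addresses;
# 192.168.2.3 is the Pi-hole address used in this post.
CLIENT = "192.168.2.50"
FIREWALL = "192.168.2.1"
PIHOLE = "192.168.2.3"
GOOGLE_DNS = "8.8.8.8"


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dport: int


def dnat(pkt: Packet) -> Packet:
    """Send port-53 traffic to the Pi-hole, unless the Pi-hole itself sent it."""
    if pkt.dport == 53 and pkt.src != PIHOLE:
        return replace(pkt, dst=PIHOLE)
    return pkt


def snat(pkt: Packet) -> Packet:
    """Masquerade: rewrite the source to the firewall's own address."""
    return replace(pkt, src=FIREWALL)


def reply_source_seen_by_client(use_snat: bool) -> str:
    """Source address on the DNS reply as the client receives it."""
    query = Packet(src=CLIENT, dst=GOOGLE_DNS, dport=53)
    forwarded = dnat(query)
    if use_snat:
        forwarded = snat(forwarded)
    if forwarded.src == FIREWALL:
        # The Pi-hole answers the firewall, whose connection tracking undoes
        # both translations, so the reply appears to come from 8.8.8.8.
        return query.dst
    # The Pi-hole sees the client's own address and, being on the same LAN,
    # answers it directly, bypassing the firewall and its NAT state.
    return forwarded.dst


for use_snat in (False, True):
    print(f"SNAT={use_snat}: client sees reply from {reply_source_seen_by_client(use_snat)}")
```

Running it prints `SNAT=False: client sees reply from 192.168.2.3` and `SNAT=True: client sees reply from 8.8.8.8`. In the first case the client asked 8.8.8.8 but got an answer from another address, which is exactly the “unexpected source” situation.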

OPNsense, however, is built on top of FreeBSD and uses the pf firewall, which works differently from the Linux iptables firewall. Here is what I had to do to get the DNS redirect working.

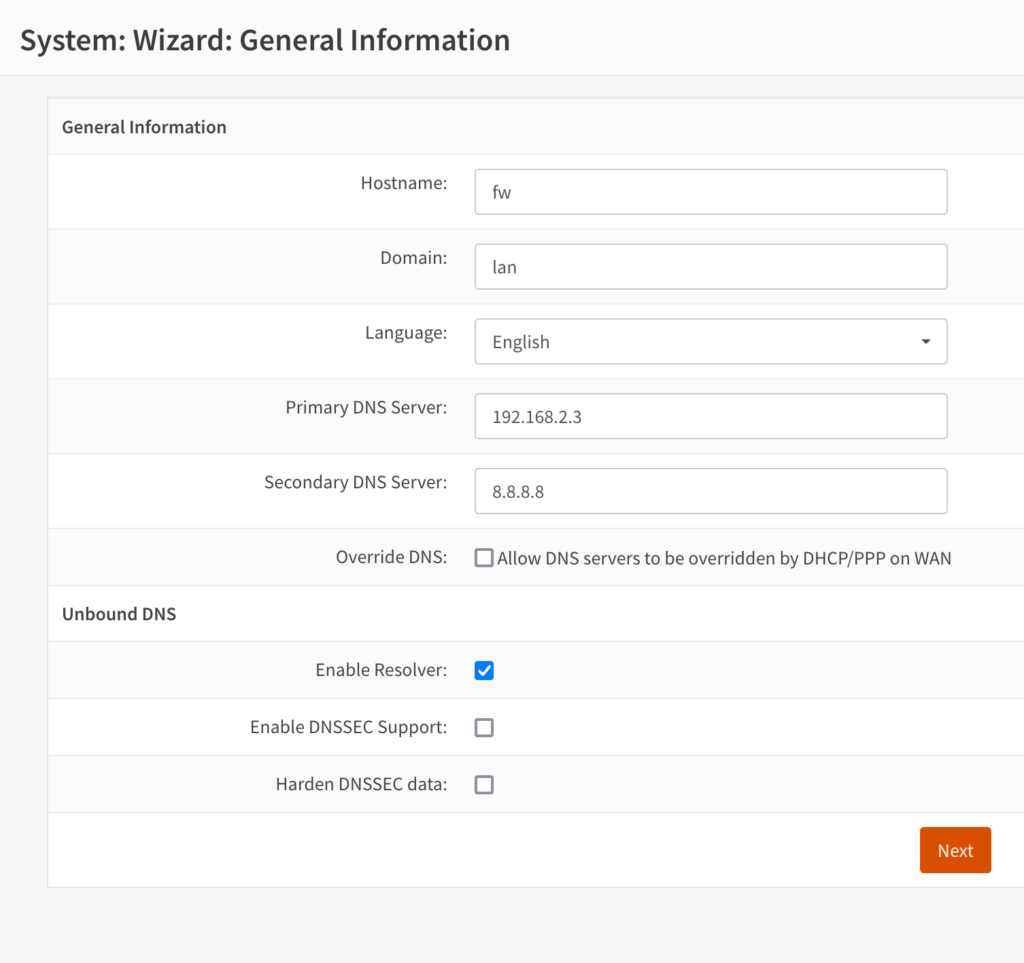

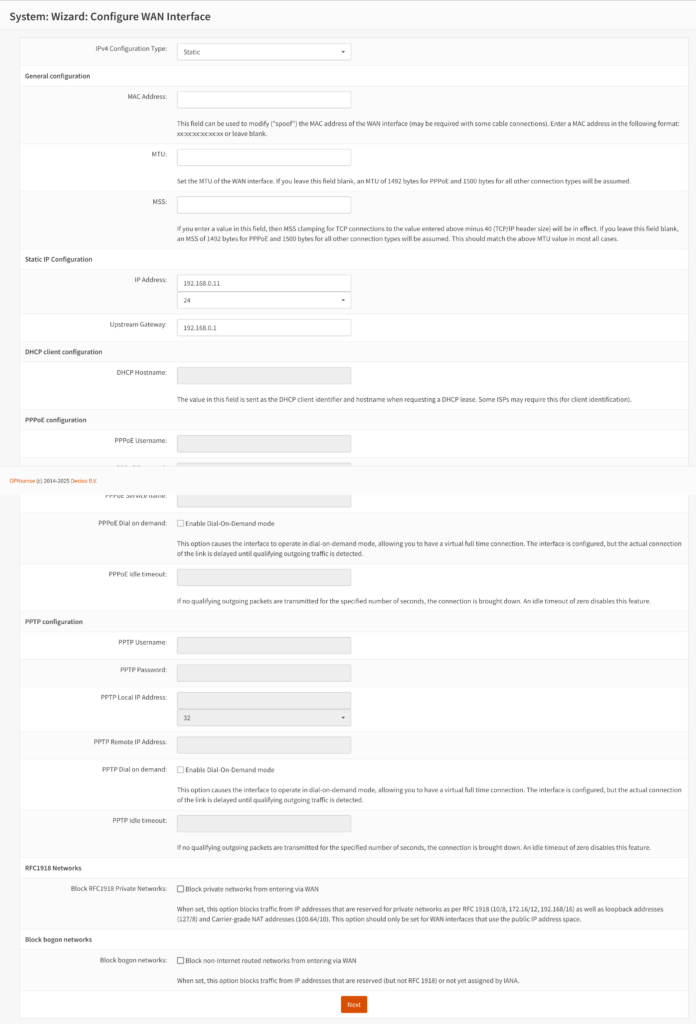

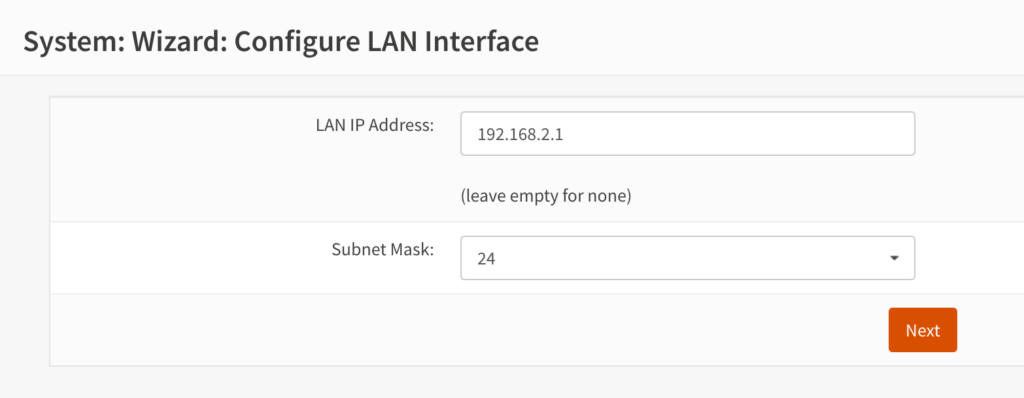

I started with a fresh installation of OPNsense, so your mileage may vary if you start from an existing configuration. I enabled the ‘Automatic outbound NAT for Reflection’ option in Firewall > Settings > Advanced. This option makes OPNsense automatically masquerade the source address of the redirected packets. I needed it because the default outbound NAT mode in OPNsense is ‘Automatic outbound NAT rule generation (no manual rules can be used)’, so I could not simply add a manual SNAT rule as I did on my previous devices.

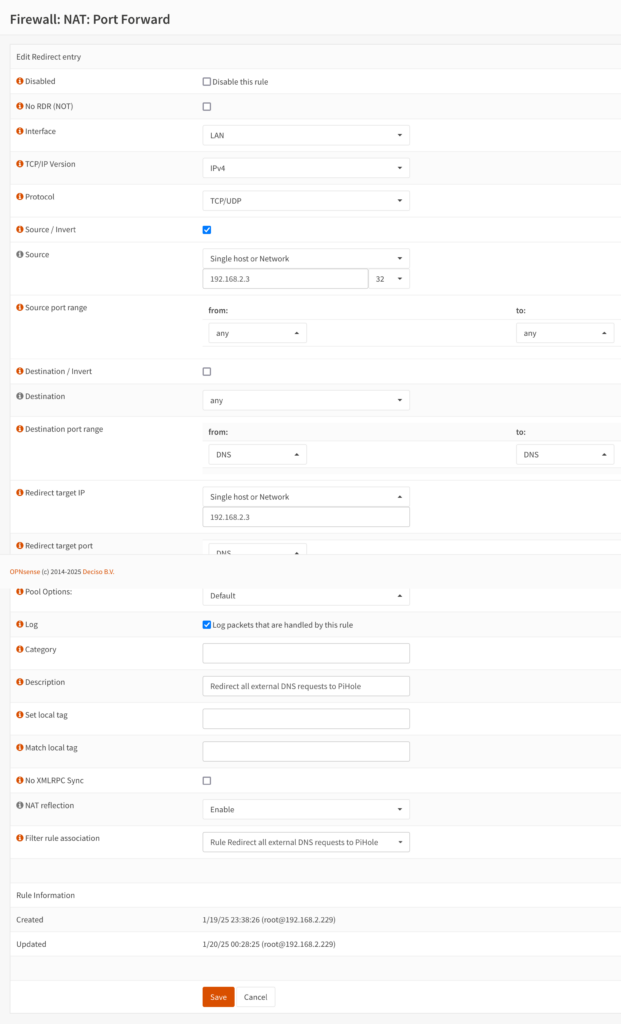

Then I went to Firewall > NAT > Port Forward and clicked the + icon to add a new rule that looks like the screenshot below.

Here are the relevant settings that I changed before saving the rule.

- Interface: LAN
- TCP/IP Version: IPv4
- Protocol: TCP/UDP
- Source/Invert: (checked)
- Source: Single host or Network, 192.168.2.3/32 (this is the IP address of my Pi-hole instance)
- Redirect target port: DNS (I apologize for the screenshot partially hiding this)
- Description: Redirect all external DNS requests to PiHole
- NAT Reflection: Enable
- Filter rule association: Add associated filter rule
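The matching part of this rule can be approximated with a short Python predicate. This is only a model of what the settings express, not how pf actually evaluates rules. It assumes the rule matches on destination port DNS (53), which the settings list does not spell out, and it leaves out the redirect target itself.

```python
import ipaddress

# Settings from the rule above. Matching on destination port 53 is an
# assumption: the post lists only the redirect target port (DNS).
PIHOLE_NET = ipaddress.ip_network("192.168.2.3/32")
DNS_PORT = 53


def rule_matches(src: str, proto: str, dport: int) -> bool:
    """Approximate whether a packet arriving on LAN hits the port-forward rule."""
    addr = ipaddress.ip_address(src)
    if addr.version != 4:                     # TCP/IP Version: IPv4
        return False
    if proto.lower() not in ("tcp", "udp"):   # Protocol: TCP/UDP
        return False
    if dport != DNS_PORT:                     # assumed destination port: DNS
        return False
    # Source/Invert checked: match every source EXCEPT the Pi-hole's /32.
    return addr not in PIHOLE_NET
```

For example, `rule_matches("192.168.2.50", "udp", 53)` is true for a hypothetical LAN client, while the same query from 192.168.2.3 does not match. That exception is what lets the Pi-hole itself keep reaching its upstream resolvers instead of being redirected back to itself.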

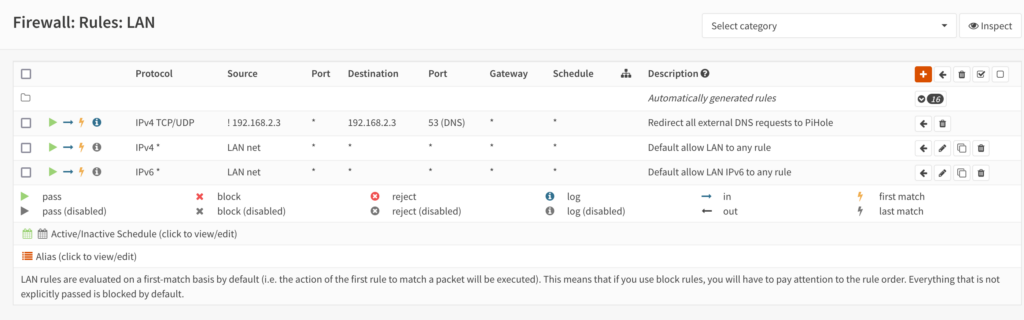

Next, on the Firewall > Rules > LAN page, I moved the associated filter rule created for this port forward to the top of the rule list so that it looks similar to the screenshot below.

Now, when I queried the DNS record for a domain using `dig`, the response came from my Pi-hole instance. When I queried Google DNS for a domain blocked by my Pi-hole, using a command like `dig blocked-domain.com @8.8.8.8`, the query was redirected to the Pi-hole DNS server and the response contained the address 0.0.0.0, as if it had come from the Google DNS resolver, 8.8.8.8. So it all worked! 🎉
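The same check can be scripted without `dig`. Below is a minimal sketch using only Python's standard library: it hand-builds a DNS A-record query (RFC 1035 wire format), sends it over UDP to a chosen server, and returns the address the reply came from together with any IPv4 answers. It deliberately skips EDNS, TCP fallback and retries, and `blocked-domain.com` is a placeholder, just like in the `dig` command above.

```python
from __future__ import annotations

import random
import socket
import struct


def build_query(name: str, qid: int) -> bytes:
    """Build a minimal DNS query for an A record with recursion desired."""
    header = struct.pack("!HHHHHH", qid, 0x0100, 1, 0, 0, 0)
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def _skip_name(msg: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) domain name."""
    while True:
        length = msg[offset]
        if (length & 0xC0) == 0xC0:  # compression pointer: 2 bytes, ends the name
            return offset + 2
        if length == 0:
            return offset + 1
        offset += 1 + length


def parse_a_records(msg: bytes, qid: int) -> list[str]:
    """Extract IPv4 addresses from the answer section of a DNS response."""
    rid, _flags, qdcount, ancount, _ns, _ar = struct.unpack("!HHHHHH", msg[:12])
    if rid != qid:
        raise ValueError("response ID does not match query ID")
    offset = 12
    for _ in range(qdcount):
        offset = _skip_name(msg, offset) + 4  # skip QTYPE and QCLASS
    addresses = []
    for _ in range(ancount):
        offset = _skip_name(msg, offset)
        rtype, _rclass, _ttl, rdlength = struct.unpack("!HHIH", msg[offset:offset + 10])
        offset += 10
        if rtype == 1 and rdlength == 4:
            addresses.append(socket.inet_ntoa(msg[offset:offset + 4]))
        offset += rdlength
    return addresses


def query_a(name: str, server: str = "8.8.8.8", timeout: float = 3.0) -> tuple[str, list[str]]:
    """Send the query over UDP; return (address the reply came from, A records)."""
    qid = random.randrange(0x10000)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name, qid), (server, 53))
        data, (reply_from, _port) = sock.recvfrom(512)
    return reply_from, parse_a_records(data, qid)
```

A call such as `query_a("blocked-domain.com")` returns the reply's source address and the A records. Based on the behaviour described above, with the redirect in place a Pi-hole-blocked domain should come back as `0.0.0.0` while the reply still appears to come from 8.8.8.8.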

Bonus reading: https://labzilla.io/blog/force-dns-pihole. It describes a similar setup on pfSense, from which OPNsense was forked, using slightly different rules and a different baseline configuration to achieve a similar result. It also explains how to block all outgoing DNS-over-HTTPS (DoH) traffic using pfSense. The linked Hacker News thread also has insightful comments on this topic.